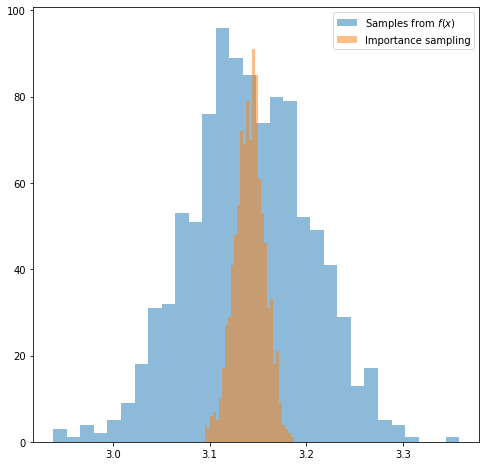

A Quick Introduction to Importance Sampling

You might have encountered the term importance sampling in a machine learning paper. This post provides a quick hands-on introduction to this topic. Hopefully, after reading it, you will understand how to use this technique in practice.
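As a taste of the idea, here is a minimal sketch (not the post's code). It estimates an expectation under a target density $p$ using samples from a different proposal density $q$, reweighting each sample by $p(x)/q(x)$. The example target is a standard normal $p = \mathcal{N}(0, 1)$, and the proposal $q = \mathcal{N}(3, 1)$ is an illustrative choice that puts most of its samples in the rare tail region $X > 3$. All function names are hypothetical.

```python
import math
import random

def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of N(mu, sigma^2) evaluated at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def importance_sampling_estimate(f, p_pdf, q_pdf, q_sample, n, rng):
    """Estimate E_p[f(X)] using n samples drawn from the proposal q."""
    total = 0.0
    for _ in range(n):
        x = q_sample(rng)
        weight = p_pdf(x) / q_pdf(x)  # importance weight p(x) / q(x)
        total += weight * f(x)
    return total / n

def tail_probability(threshold=3.0, n=100_000, seed=0):
    """Estimate P(X > threshold) for X ~ N(0, 1) with proposal N(threshold, 1)."""
    rng = random.Random(seed)
    return importance_sampling_estimate(
        f=lambda x: 1.0 if x > threshold else 0.0,
        p_pdf=normal_pdf,
        q_pdf=lambda x: normal_pdf(x, mu=threshold),
        q_sample=lambda r: r.gauss(threshold, 1.0),
        n=n,
        rng=rng,
    )

estimate = tail_probability()
exact = 0.5 * math.erfc(3.0 / math.sqrt(2.0))  # closed-form P(X > 3)
print(f"IS estimate: {estimate:.6f}, exact: {exact:.6f}")
```

For comparison, naive Monte Carlo with 100,000 samples from $p$ would see only about 135 samples beyond 3, whereas here roughly half of the proposal samples land in that region and contribute to the estimate.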

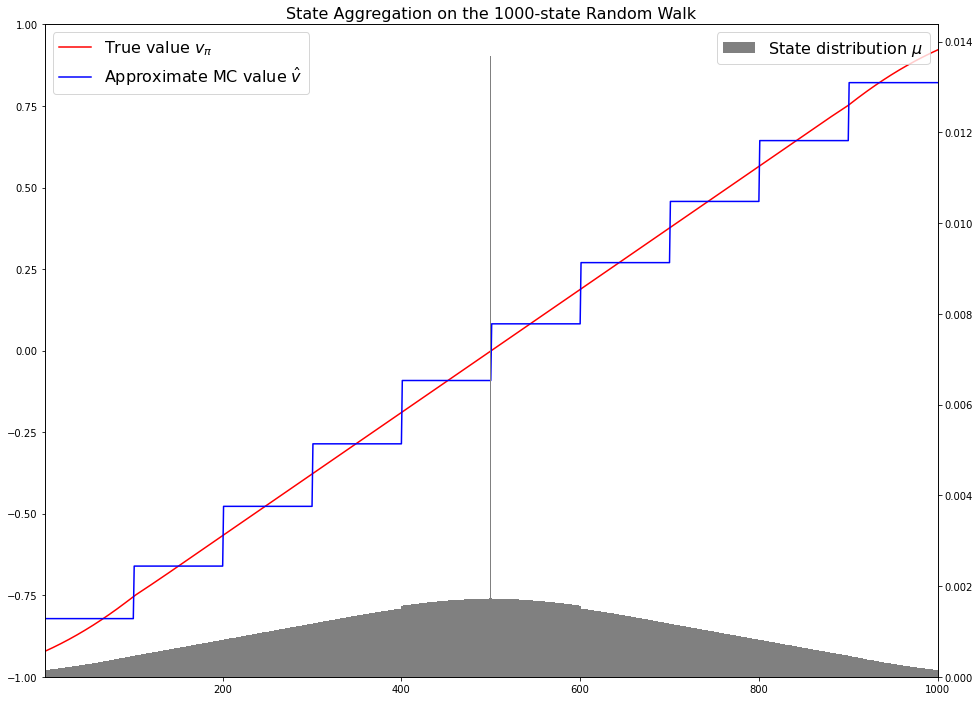

Gradient Monte Carlo for Value Function Approximation (RL S&B Example 9.1)

In this post we will implement Example 9.1 from Chapter 9 of Reinforcement Learning (Sutton and Barto). This is an example of on-policy prediction with approximation, which means that we approximate the value function of the policy we are currently following. We will use Gradient Monte Carlo to approximate $v_\pi(s)$.
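A minimal pure-Python sketch of Gradient Monte Carlo on the 1000-state random walk from Example 9.1, using state aggregation into 10 groups of 100 states. The environment follows the book's description: episodes start in state 500, each step jumps uniformly to one of the 100 neighbouring states on the left or right, and terminating on the left gives reward -1 while terminating on the right gives +1. With one weight per group, $\hat v(s, \mathbf{w})$ is the weight of the group containing $s$. The gradient is therefore 1 for that weight and 0 elsewhere, so the update $\mathbf{w} \leftarrow \mathbf{w} + \alpha [G_t - \hat v(S_t, \mathbf{w})] \nabla \hat v(S_t, \mathbf{w})$ changes a single weight. Function and constant names are hypothetical, not the post's code.

```python
import random

N_STATES = 1000      # non-terminal states are numbered 1..1000
START_STATE = 500
MAX_JUMP = 100       # jump uniformly to one of the 100 neighbours on either side
GROUP_SIZE = 100     # state aggregation: 10 groups of 100 states

def generate_episode(rng):
    """Follow the random-walk policy from START_STATE; return visited states and final reward."""
    state = START_STATE
    visited = []
    while True:
        visited.append(state)
        jump = rng.randint(1, MAX_JUMP)  # randint is inclusive on both ends
        if rng.random() < 0.5:
            jump = -jump
        state += jump
        # Jumping past either edge terminates the episode, which gives states near
        # the edges the extra termination probability described in the book.
        if state < 1:
            return visited, -1.0
        if state > N_STATES:
            return visited, 1.0

def gradient_mc(num_episodes=100_000, alpha=2e-5, seed=0):
    """Gradient Monte Carlo prediction with state aggregation; returns one weight per group."""
    rng = random.Random(seed)
    w = [0.0] * (N_STATES // GROUP_SIZE)
    for _ in range(num_episodes):
        visited, final_reward = generate_episode(rng)
        # gamma = 1 and all intermediate rewards are 0, so G_t = final_reward for every t.
        for s in visited:
            g = (s - 1) // GROUP_SIZE                # index of the group containing s
            w[g] += alpha * (final_reward - w[g])    # gradient is one-hot at g
    return w
```

The defaults match the book's setup (100,000 episodes, $\alpha = 2 \times 10^{-5}$), which is slow in pure Python. For a quick check, a shorter run such as `gradient_mc(num_episodes=3000, alpha=2e-3)` already gives a clearly negative weight for the leftmost group and a positive one for the rightmost.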

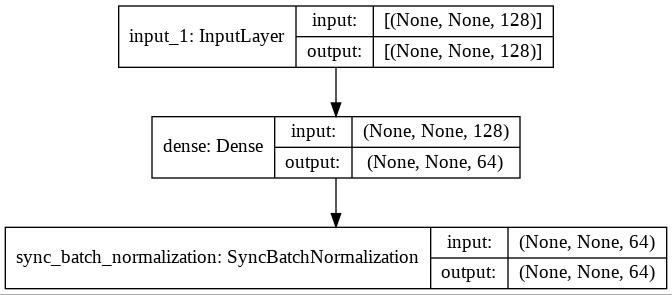

Distributed BatchNorm in TensorFlow

In this post we will see an example of how BatchNorm behaves during distributed training with TensorFlow on TPUs using tf.keras.layers. This post assumes you know how batch normalisation works.
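The core question in distributed BatchNorm is which batch the mean and variance are computed over: each replica's local shard, or the global batch across all replicas. The framework-agnostic sketch below shows how global statistics can be assembled from per-replica partial sums (count, sum, sum of squares). The loop stands in for an all-reduce. This is an illustration of the idea on scalar features, not TensorFlow's implementation, and all names are hypothetical.

```python
import math

def local_partial_sums(batch):
    """Statistics one replica can compute on its own shard: count, sum, sum of squares."""
    return len(batch), sum(batch), sum(x * x for x in batch)

def cross_replica_moments(replica_batches):
    """Mean and biased variance over the union of all replicas' batches.

    The loop stands in for an all-reduce (sum) of each replica's partial sums.
    """
    count = total = total_sq = 0.0
    for batch in replica_batches:
        n, s, sq = local_partial_sums(batch)
        count += n
        total += s
        total_sq += sq
    mean = total / count
    var = total_sq / count - mean * mean  # E[x^2] - E[x]^2
    return mean, var

def normalise(batch, mean, var, eps=1e-5):
    """Batch-normalise a list of scalars with the given statistics (no scale/shift)."""
    return [(x - mean) / math.sqrt(var + eps) for x in batch]

replicas = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
global_mean, global_var = cross_replica_moments(replicas)
local_mean, local_var = cross_replica_moments(replicas[:1])  # replica 0 on its own
```

With only local statistics, replica 0 would normalise with mean 1.5 instead of the global 3.5, so the same input value is normalised differently on each replica. The $E[x^2] - E[x]^2$ form is convenient for reduction but can lose precision for large-magnitude inputs.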

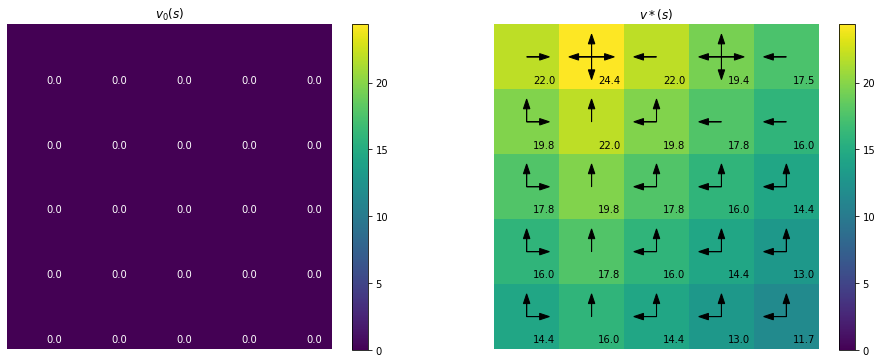

Vectorising the Bellman equations (RL S&B Examples 3.5, 3.8)

In this blog post we will reproduce Examples 3.5 and 3.8 in Reinforcement Learning (Sutton and Barto), both of which involve the Bellman equation. This post presumes a basic understanding of reinforcement learning, in particular policies and value functions, and only outlines the theory needed to implement the examples.
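For a fixed policy, the Bellman equations for all states can be stacked into one matrix equation $v_\pi = r_\pi + \gamma P_\pi v_\pi$. Here $P_\pi$ is the state-to-state transition matrix under the policy and $r_\pi$ is the vector of expected immediate rewards. Solving it gives $v_\pi = (I - \gamma P_\pi)^{-1} r_\pi$. The sketch below applies this to the 5x5 gridworld of Example 3.5 with the equiprobable random policy and $\gamma = 0.9$. From A every action leads to A' with reward +10, from B every action leads to B' with reward +5, and moving off the grid leaves the state unchanged with reward -1. It uses NumPy and is an illustration under these assumptions, not the post's code. Function names are hypothetical.

```python
import numpy as np

SIZE = 5
GAMMA = 0.9
A, A_PRIME = (0, 1), (4, 1)
B, B_PRIME = (0, 3), (2, 3)
ACTIONS = [(-1, 0), (1, 0), (0, 1), (0, -1)]  # north, south, east, west

def step(state, action):
    """Deterministic gridworld dynamics: returns (next_state, reward)."""
    if state == A:
        return A_PRIME, 10.0
    if state == B:
        return B_PRIME, 5.0
    row, col = state[0] + action[0], state[1] + action[1]
    if 0 <= row < SIZE and 0 <= col < SIZE:
        return (row, col), 0.0
    return state, -1.0  # bumped into the edge: stay put, reward -1

def random_policy_values():
    """Solve (I - gamma P) v = r for the equiprobable random policy."""
    n = SIZE * SIZE
    P = np.zeros((n, n))  # P[s, s'] = probability of moving s -> s' under the policy
    r = np.zeros(n)       # r[s] = expected immediate reward in state s
    prob = 1.0 / len(ACTIONS)
    for i in range(SIZE):
        for j in range(SIZE):
            s = i * SIZE + j
            for action in ACTIONS:
                (ni, nj), reward = step((i, j), action)
                P[s, ni * SIZE + nj] += prob
                r[s] += prob * reward
    v = np.linalg.solve(np.eye(n) - GAMMA * P, r)
    return v.reshape(SIZE, SIZE)

values = random_policy_values()
print(np.round(values, 1))
```

Solving the linear system with `np.linalg.solve` avoids forming the inverse explicitly. Iterating the Bellman update until convergence would reach the same values more slowly.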

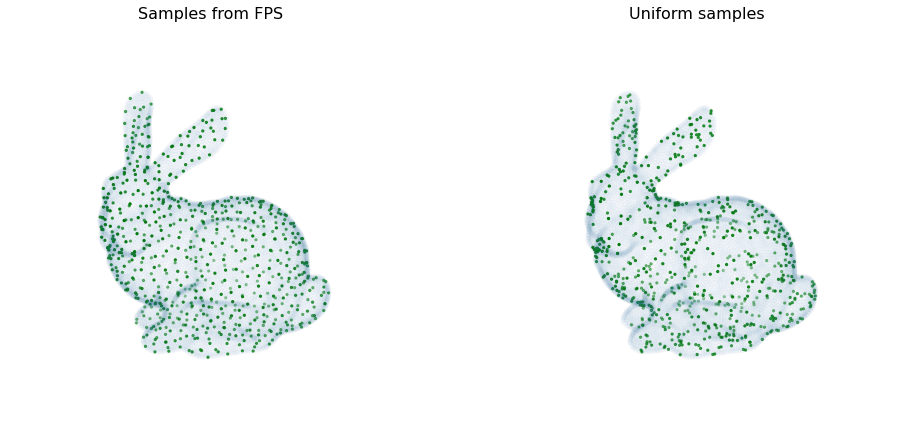

Furthest Point Sampling

I have come across an algorithm named furthest point sampling (sometimes called farthest point sampling) a few times in deep learning papers dealing with point cloud data. However, there are few resources online explaining what this algorithm actually does. Here I will try to explain it with reference to this CUDA implementation. Along the way we will also see how to implement it in an efficient, parallelised way.
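The sequential version of the algorithm fits in a few lines, shown here as a pure-Python sketch rather than the CUDA implementation. Each point keeps its squared distance to the nearest already-selected point. After every selection those distances are updated, and the point with the largest one is picked next. Starting from index 0 is an assumption, since implementations differ in how they choose the first point. The function name is hypothetical.

```python
def furthest_point_sampling(points, k, start_index=0):
    """Return the indices of k points chosen by furthest point sampling.

    points: a sequence of equal-length coordinate tuples.
    """
    selected = [start_index]
    # min_dist[i] = squared distance from point i to its nearest selected point
    min_dist = [float("inf")] * len(points)
    current = start_index
    for _ in range(k - 1):
        c = points[current]
        best, best_dist = -1, -1.0
        for i, p in enumerate(points):
            d = sum((a - b) ** 2 for a, b in zip(p, c))
            if d < min_dist[i]:
                min_dist[i] = d          # the newly selected point may now be nearest
            if min_dist[i] > best_dist:  # track the point furthest from the selected set
                best, best_dist = i, min_dist[i]
        selected.append(best)
        current = best
    return selected

print(furthest_point_sampling([(0, 0, 0), (1, 0, 0), (2, 0, 0), (10, 0, 0)], 3))
```

The inner loop over points is independent for each `i`, apart from the final argmax, which is what makes the update-then-reduce step a good fit for a parallel GPU kernel. The outer loop over the `k` selections stays sequential.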

Variational Auto-Encoder - Part 2 / Implementing a simple model

Introduction

In the previous post we motivated the idea of a Variational Auto-Encoder. Here we will have a go at implementing a very simple model to get a sense of all the components and steps involved.
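Two components that appear in almost every simple VAE are the reparameterisation trick and the closed-form KL divergence between a diagonal Gaussian posterior and a standard normal prior. For each latent dimension the KL term is $\tfrac{1}{2}(\mu^2 + \sigma^2 - 1 - \log \sigma^2)$. The pure-Python sketch below assumes the encoder outputs a mean and a log-variance per latent dimension. It also assumes a Gaussian decoder with unit variance, so the reconstruction term is half the squared error (up to a constant). These are illustrative choices, not necessarily the post's model, and all names are hypothetical.

```python
import math
import random

def reparameterise(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), sigma = exp(0.5 * log_var)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def negative_elbo(x, x_recon, mu, log_var):
    """Reconstruction term (unit-variance Gaussian decoder, constant dropped) plus KL."""
    recon = 0.5 * sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, log_var)

rng = random.Random(0)
z = reparameterise([0.5, -1.0], [0.0, -2.0], rng)
```

Writing the sample as a deterministic function of `mu`, `log_var` and independent noise is what lets gradients flow back into the encoder during training.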

Variational Auto-Encoder - Part 1 / Introduction

Consider this painting by Vincent van Gogh, known as “Wheatfield with Crows”. Suppose you want to learn a probability distribution over Van Gogh’s paintings. What a computer, or a deep learning algorithm, sees when it looks at this painting is a grid of RGB pixel intensities. So one approach might be to model the data as a sequence of individual pixels. Yet that is manifestly not how the artist constructed the painting.

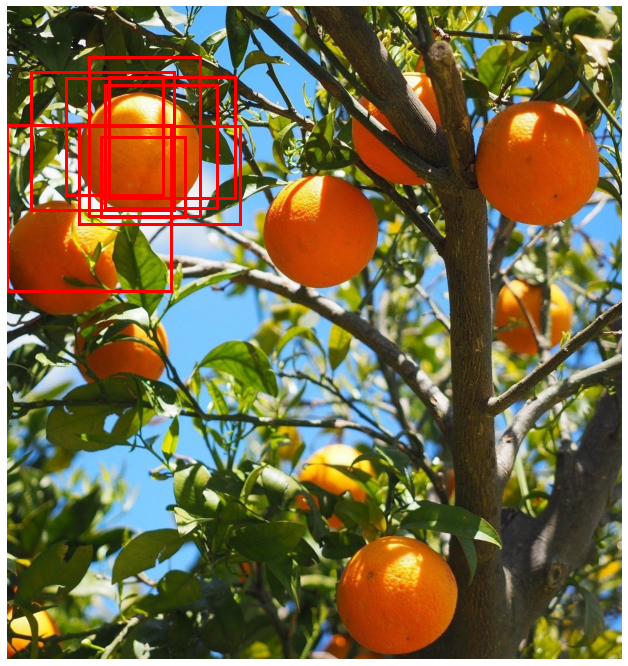

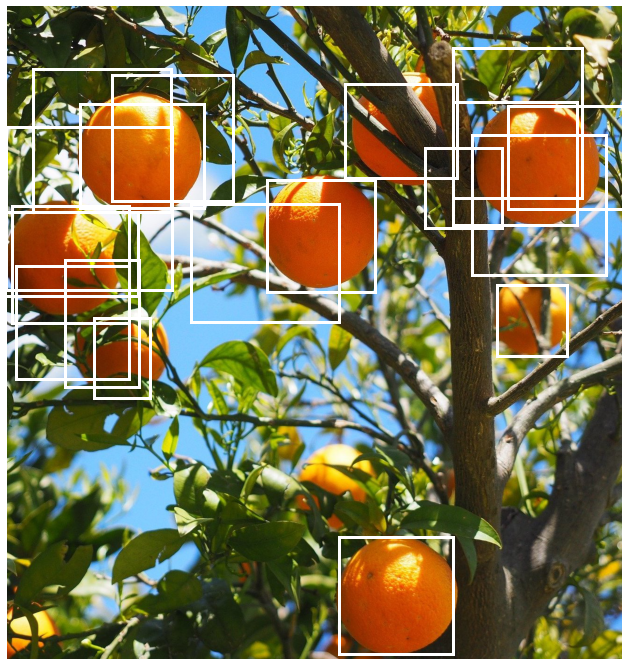

Generating crops from images

This post explains how to implement a function to generate a crop of a particular object from an image given the position of the centroid of the object. The images below show an example use case: cropping all the individual oranges from an image.
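A minimal sketch of such a function on an image stored as a list of rows. It assumes the centroid is given as pixel coordinates `(cx, cy)` (column, row). When the window would run past a border, it is shifted back inside the image rather than padded. Both of these are assumptions, since the post may handle borders differently. The function name is hypothetical.

```python
def crop_around_centroid(image, cx, cy, crop_h, crop_w):
    """Return a crop_h x crop_w window centred on (cx, cy), shifted to stay inside the image.

    image: list of rows, each row a list of pixel values (H x W).
    cx, cy: centroid column and row in pixels.
    """
    h, w = len(image), len(image[0])
    if crop_h > h or crop_w > w:
        raise ValueError("crop is larger than the image")
    # Centre the window on the centroid, then clamp its top-left corner into range.
    top = min(max(cy - crop_h // 2, 0), h - crop_h)
    left = min(max(cx - crop_w // 2, 0), w - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

image = [[r * 6 + c for c in range(6)] for r in range(6)]  # 6x6 toy image
print(crop_around_centroid(image, 3, 3, 3, 3))
```

Shifting keeps every crop the same size and free of padding. The trade-off is that an object near the border is no longer centred in its crop.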

Non-maximum suppression (NMS)

This blog post is a quick, practical introduction to a popular post-processing clustering algorithm used in object detection models. The code used to generate the figures and the demo can be found in this Colab notebook.
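A minimal pure-Python sketch of greedy NMS, assuming boxes in `(x1, y1, x2, y2)` format and a single class. Boxes are visited in order of decreasing score. A box is kept only if its IoU with every box kept so far is at or below the threshold. This is equivalent to repeatedly taking the highest-scoring remaining box and discarding everything that overlaps it too much. Function names are hypothetical, not the notebook's code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of kept boxes, best first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Suppress box i if it overlaps any already-kept (higher-scoring) box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))
```

The first two boxes overlap with an IoU of 81/119 ≈ 0.68, so at the default threshold of 0.5 the lower-scoring one is suppressed. At a threshold of 0.7 all three boxes survive.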

Vectorizing Intersection over Union (IoU)

Supporting batched data is an important requirement for a deep learning pipeline to enable time-efficient model training. Often when writing a function, the easiest way to start is to first handle one element and then use a for loop over all the elements in the batch.
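To make the contrast concrete, here is a sketch of both approaches for pairwise IoU between two sets of boxes in `(x1, y1, x2, y2)` format, written with NumPy. The loop version calls a single-pair function for every pair. The vectorised version inserts singleton axes so that broadcasting produces all N x M intersections at once. It assumes every box has positive area (otherwise the union can be zero). Function names are hypothetical, not the post's code.

```python
import numpy as np

def iou_pair(a, b):
    """IoU of a single pair of boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union

def iou_loop(boxes_a, boxes_b):
    """Baseline: handle one pair at a time inside Python loops."""
    return np.array([[iou_pair(a, b) for b in boxes_b] for a in boxes_a])

def iou_vectorised(boxes_a, boxes_b):
    """boxes_a: (N, 4), boxes_b: (M, 4). Returns an (N, M) IoU matrix."""
    a = boxes_a[:, None, :]  # (N, 1, 4)
    b = boxes_b[None, :, :]  # (1, M, 4)
    ix1 = np.maximum(a[..., 0], b[..., 0])  # broadcasts to (N, M)
    iy1 = np.maximum(a[..., 1], b[..., 1])
    ix2 = np.minimum(a[..., 2], b[..., 2])
    iy2 = np.minimum(a[..., 3], b[..., 3])
    inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
    area_a = (boxes_a[:, 2] - boxes_a[:, 0]) * (boxes_a[:, 3] - boxes_a[:, 1])
    area_b = (boxes_b[:, 2] - boxes_b[:, 0]) * (boxes_b[:, 3] - boxes_b[:, 1])
    union = area_a[:, None] + area_b[None, :] - inter
    return inter / union

boxes_a = np.array([[0, 0, 10, 10], [20, 20, 30, 30]], dtype=float)
boxes_b = np.array([[1, 1, 11, 11]], dtype=float)
print(iou_vectorised(boxes_a, boxes_b))
```

Both functions return the same matrix. The vectorised one replaces N x M Python-level calls with a handful of array operations, and the same broadcasting pattern carries over to tensor libraries.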